How Explainable AI (XAI) Is Shaping the Next Frontier for Trustworthy Research & Industry in 2026

Kenfra Research - Bavithra, 2025-10-31

Artificial Intelligence has reached a turning point. After years of focusing mainly on raw performance (bigger models, faster training, and higher accuracy), the global conversation is shifting. The new question isn’t how smart an AI system can get, but how far we can trust it. This is where Explainable AI (XAI) is quietly reshaping the landscape for both research and industry as we head into 2026.

XAI offers a path forward, bringing clarity and accountability to the way machines think and make decisions.

What is Explainable AI (XAI)?

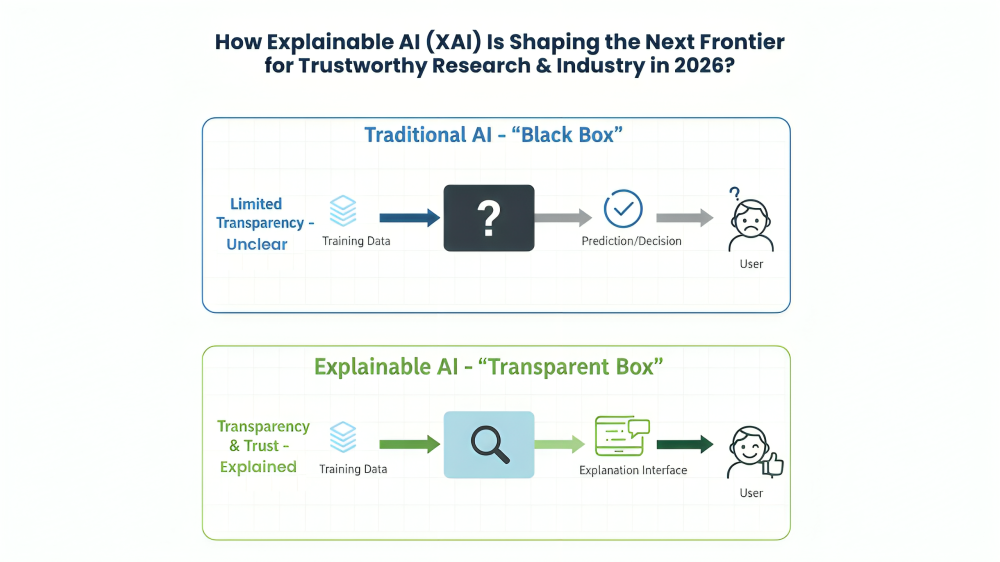

Explainable AI, often called XAI, refers to artificial intelligence systems that can explain how and why they reach their decisions. In simple terms, it’s about making AI understandable to humans. Instead of acting like a black box, XAI allows users, scientists, doctors, or business leaders to see the reasoning behind an AI’s result.

As we step into 2026, XAI is shaping the next frontier for trustworthy research and industry. It’s helping people regain trust in technology by adding clarity and accountability to AI decisions.

Why Explainable AI Matters Today

Until recently, AI development focused mainly on speed and accuracy. Many companies adopted the latest AI technology of 2025, such as GPT-5 and other large models, to improve productivity or make predictions. But there was one big problem: users often could not tell how these systems reached their answers.

Now the emphasis is shifting. Performance alone is no longer enough; transparency, interpretability, and trust are increasingly demanded by users and regulators alike. If an AI system is going to help diagnose a disease or approve a bank loan, people need to know why it made that choice. That is what explainable AI provides: clarity about the reasoning, which in turn makes it possible to check decisions for fairness and to act on them with confidence.

Applications of Explainable AI (XAI)

Explainable AI isn’t just a research idea; it’s already being used in many industries that need both accuracy and trust. Here are the main applications of XAI and how they work in practice:

1. Healthcare

In hospitals, AI helps doctors detect diseases, read X-rays, and suggest treatments.

With Explainable AI, doctors can see why the system recommended a specific diagnosis or medicine.

For example:

- It may highlight which part of an X-ray shows a tumour.

- It can explain how patient data like blood pressure, heart rate, or age influenced the decision.

This builds trust between doctors and technology and helps ensure patient safety.

It also allows healthcare teams to verify that AI models are not biased or making mistakes in sensitive cases.
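To make "how patient data influenced the decision" concrete, here is a minimal sketch assuming a logistic-regression risk model. For a linear model, each feature's contribution to the log-odds, measured against a reference patient, is simply its weight times the difference from the reference value, and these contributions add up exactly to the change in the model's output. The feature names, weights, reference values, and the helper `explain_risk` are hypothetical illustrations, not clinical values.

```python
import math

# Hypothetical model: weights on the log-odds scale (NOT clinical values).
WEIGHTS = {"systolic_bp": 0.03, "heart_rate": 0.02, "age": 0.05}
INTERCEPT = -12.0
# Hypothetical "typical" patient used as the baseline for explanations.
REFERENCE = {"systolic_bp": 120.0, "heart_rate": 70.0, "age": 50.0}


def log_odds(patient):
    """Linear score of the logistic model."""
    return INTERCEPT + sum(WEIGHTS[f] * patient[f] for f in WEIGHTS)


def risk(patient):
    """Predicted probability, via the logistic (sigmoid) function."""
    return 1.0 / (1.0 + math.exp(-log_odds(patient)))


def explain_risk(patient):
    """Per-feature contribution to the log-odds relative to REFERENCE.

    For a linear model these contributions sum exactly to
    log_odds(patient) - log_odds(REFERENCE).
    """
    contributions = {
        f: WEIGHTS[f] * (patient[f] - REFERENCE[f]) for f in WEIGHTS
    }
    # Largest absolute contribution first, so the main drivers come first.
    return sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)


patient = {"systolic_bp": 160.0, "heart_rate": 75.0, "age": 68.0}
print(f"risk = {risk(patient):.3f}")
for feature, c in explain_risk(patient):
    print(f"{feature:12s} {c:+.2f} log-odds")
```

For non-linear models such as deep networks this exact breakdown no longer holds, which is why approximate attribution methods such as SHAP or LIME are used instead.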

2. Finance

Banks and insurance companies use AI for loan approvals, fraud detection, and credit scoring.

But customers and regulators now demand transparency.

Explainable AI systems can show:

- Why a loan was accepted or rejected

- Which factors (like income, credit score, or spending history) affected the decision

This makes financial decisions more transparent and helps lenders detect and correct bias, which can also reduce complaints.

It also helps banks meet regulatory requirements for ethical AI use.
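A common way to answer "why was this loan rejected?" is a counterfactual explanation: the smallest change to an input that would flip the decision. The sketch below assumes a simple linear credit score with a fixed approval threshold; the weights, threshold, feature names, and helper functions are hypothetical, and real lenders' models and adverse-action rules differ.

```python
# Hypothetical linear credit score: illustrative weights, not a real scorecard.
WEIGHTS = {
    "income_k": 0.8,         # annual income, in thousands
    "credit_score": 0.5,     # credit bureau score
    "debt_ratio_pct": -2.0,  # debt-to-income ratio, in percent
}
THRESHOLD = 300.0  # hypothetical cut-off: approve when score >= THRESHOLD


def score(applicant):
    return sum(WEIGHTS[f] * applicant[f] for f in WEIGHTS)


def decide(applicant):
    return "approved" if score(applicant) >= THRESHOLD else "rejected"


def counterfactuals(applicant):
    """For a rejected applicant, return the change to each single feature
    (all others held fixed) that would lift the score to the threshold."""
    gap = THRESHOLD - score(applicant)
    if gap <= 0:
        return {}  # already approved: nothing needs to change
    # Solve WEIGHTS[f] * delta == gap for delta, one feature at a time.
    return {f: gap / w for f, w in WEIGHTS.items() if w != 0}


applicant = {"income_k": 40.0, "credit_score": 600.0, "debt_ratio_pct": 35.0}
print("decision:", decide(applicant))
for feature, delta in counterfactuals(applicant).items():
    print(f"  change {feature} by {delta:+.1f} to reach approval")
```

Single-feature counterfactuals are the simplest case; practical methods usually search over several features at once and rule out changes an applicant cannot make.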

3. Education

In education, AI tools recommend learning materials, evaluate assignments, and assist with student assessments.

Explainable AI helps teachers and students understand how the system graded or suggested something.

For instance, if an AI grading tool gives a low score, it can show which parts of an essay need improvement.

This ensures fairness, supports personalized learning, and helps students trust the process rather than doubt it.

4. Manufacturing and Industry

Factories now use AI to predict machine failures, manage supply chains, and improve product quality. With XAI, engineers can see why the system predicts that a machine might break down soon.

For example, it may show that a rise in vibration levels or an unusual temperature pattern triggered the alert. This helps companies fix problems faster, reduce downtime, and improve efficiency.

Explainable AI also supports safe automation, where human workers can understand and oversee AI-driven machinery.
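An alert that names the signals behind it can be sketched with a deliberately simple, transparent detector: compare each sensor reading with its healthy-operation history and report every sensor that is unusually far from normal. The sensor names, baseline data, and the 3-standard-deviation threshold below are hypothetical assumptions for illustration.

```python
from statistics import mean, stdev

# Hypothetical healthy-operation history for one machine.
BASELINE = {
    "vibration_mm_s": [2.1, 2.3, 2.0, 2.2, 2.4, 2.1, 2.2],
    "temperature_c": [61.0, 62.5, 60.8, 61.7, 62.1, 61.4, 61.9],
    "current_a": [10.2, 10.4, 10.1, 10.3, 10.2, 10.5, 10.3],
}
Z_ALERT = 3.0  # hypothetical alert threshold, in standard deviations


def explain_alert(reading):
    """Return (alert, reasons): reasons lists each sensor whose z-score
    against its baseline exceeds Z_ALERT, largest deviation first."""
    reasons = []
    for sensor, history in BASELINE.items():
        mu, sigma = mean(history), stdev(history)
        z = (reading[sensor] - mu) / sigma
        if abs(z) > Z_ALERT:
            reasons.append((sensor, z))
    reasons.sort(key=lambda r: abs(r[1]), reverse=True)
    return bool(reasons), reasons


alert, reasons = explain_alert(
    {"vibration_mm_s": 3.4, "temperature_c": 66.0, "current_a": 10.3}
)
print("alert:", alert)
for sensor, z in reasons:
    print(f"  {sensor} is {z:+.1f} standard deviations from normal")
```

A learned failure-prediction model would need an attribution method on top, but the output has the form described above: which signal triggered the alert, and how far it was from normal.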

The Rise of Explainable Human–AI Collaboration Models

Trustworthy AI is not achieved by algorithms alone; it emerges when humans and machines collaborate with transparency and mutual understanding.

As we move into 2026, research is increasingly focused on explainable human–AI collaboration models: systems designed so that humans and AI can make decisions together, learn from each other, and share accountability. These models move beyond automation to create a genuine partnership between human insight and machine intelligence.

Key collaboration approaches include:

- Co-pilot systems: AI works alongside professionals such as doctors, engineers, or data analysts, offering real-time explanations for its recommendations so users can make informed choices.

- Hybrid intelligence: Integrates human intuition and ethical reasoning with AI’s analytical precision, producing more balanced and responsible outcomes.

- Interactive explainability dashboards: Provide visual representations of AI’s reasoning, allowing users to explore how conclusions were reached, question assumptions, and refine results.

These emerging frameworks show that explainability is not just a technical requirement but a human one. When people can understand and trust AI’s reasoning, collaboration becomes safer, smarter, and more ethical, paving the way for a new era of responsible innovation.

Case Studies: XAI in Healthcare and Finance (2026)

By 2026, two sectors continue to lead the global adoption of Explainable AI (XAI): healthcare and finance. Both demand precision, accountability, and transparency, making them ideal proving grounds for trustworthy AI innovation.

Healthcare: From Black-Box Diagnosis to Transparent Care

AI models now assist in reading medical images, predicting disease risks, and recommending treatments. But doctors need to understand why an AI suggests a specific action.

With XAI:

- Medical imaging systems can highlight which regions of an X-ray indicate potential tumours.

- Predictive models can explain how patient data (e.g., blood pressure, heart rate, or age) affected risk assessments.

This transparency builds clinical trust, enhances patient safety, and supports ethical medical AI, a crucial step toward human–AI collaboration in hospitals.
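Highlighting the image regions behind a prediction can be done in several ways, including Grad-CAM, integrated gradients, and occlusion. The sketch below uses occlusion sensitivity because it needs nothing but the model's output: cover one patch at a time and measure how much the score drops. The 6x6 "scan" and `toy_model` are hypothetical stand-ins for a real X-ray and a trained classifier.

```python
# Toy 6x6 grayscale "scan" with a bright blob (hypothetical data).
IMAGE = [
    [0.1, 0.1, 0.1, 0.1, 0.1, 0.1],
    [0.1, 0.2, 0.1, 0.1, 0.1, 0.1],
    [0.1, 0.1, 0.9, 0.8, 0.1, 0.1],
    [0.1, 0.1, 0.8, 0.9, 0.1, 0.1],
    [0.1, 0.1, 0.1, 0.1, 0.1, 0.1],
    [0.1, 0.1, 0.1, 0.1, 0.1, 0.1],
]


def toy_model(img):
    """Hypothetical classifier stand-in: the 'suspicion score' is the
    brightest mean over any 2x2 window."""
    n = len(img)
    return max(
        (img[r][c] + img[r][c + 1] + img[r + 1][c] + img[r + 1][c + 1]) / 4
        for r in range(n - 1)
        for c in range(n - 1)
    )


def occlusion_map(img, model, patch=2, fill=0.0):
    """Occlusion sensitivity: blank out each patch in turn and record how
    much the model's score drops. Large drops mark regions it relied on."""
    base = model(img)
    n = len(img)
    heat = [[0.0] * n for _ in range(n)]
    for r in range(0, n, patch):
        for c in range(0, n, patch):
            occluded = [row[:] for row in img]
            cells = [(i, j) for i in range(r, min(r + patch, n))
                     for j in range(c, min(c + patch, n))]
            for i, j in cells:
                occluded[i][j] = fill
            drop = base - model(occluded)
            for i, j in cells:
                heat[i][j] = drop
    return heat


for row in occlusion_map(IMAGE, toy_model):
    print(" ".join(f"{v:.2f}" for v in row))
```

Only the patch covering the bright blob produces a large drop, so that region would be highlighted for the clinician; the faint spot elsewhere does not affect the score.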

Finance: Transparent Decision-Making in a Regulated World

Financial institutions use AI to detect fraud, assess credit risk, and automate loan approvals. However, regulators and consumers now demand visibility into these decisions.

With Explainable AI:

- Algorithms can show which factors (like credit history, income, or spending patterns) influenced approval or rejection.

- Banks can audit and justify outcomes for compliance and fairness.

As a result, XAI in finance helps institutions catch flawed decisions before they reach customers and can reduce bias, complaints, and regulatory risk.
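One simple audit check compares approval rates across applicant groups. The sketch below assumes a decision log tagged with a hypothetical group label. The 0.8 cut-off echoes the "four-fifths rule" heuristic from US employment-selection guidelines and is used here only as an example threshold, not as a legal standard for lending.

```python
from collections import defaultdict

# Hypothetical audit log of (applicant_group, decision) pairs.
DECISIONS = [
    ("group_a", "approved"), ("group_a", "approved"), ("group_a", "rejected"),
    ("group_a", "approved"), ("group_a", "approved"),
    ("group_b", "approved"), ("group_b", "rejected"), ("group_b", "rejected"),
    ("group_b", "approved"), ("group_b", "rejected"),
]


def approval_rates(decisions):
    """Share of approved applications per group."""
    counts = defaultdict(lambda: [0, 0])  # group -> [approved, total]
    for group, decision in decisions:
        counts[group][1] += 1
        if decision == "approved":
            counts[group][0] += 1
    return {g: approved / total for g, (approved, total) in counts.items()}


def disparate_impact_ratio(rates):
    """Lowest group approval rate divided by the highest (1.0 = parity)."""
    return min(rates.values()) / max(rates.values())


rates = approval_rates(DECISIONS)
ratio = disparate_impact_ratio(rates)
print(rates, f"ratio={ratio:.2f}")
if ratio < 0.8:  # example cut-off only (four-fifths heuristic)
    print("Flag for review: approval rates differ substantially between groups")
```

A gap like this does not by itself prove the model is unfair, but it tells auditors where to look; the per-decision explanations are what let them check whether the factors driving the gap are legitimate.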

Challenges & Open Research Gaps in Explainable and Trustworthy AI

Despite rapid progress, several open research gaps remain for the next generation of scholars and innovators.

As we move into 2026, PhD researchers in AI and data science are focusing on critical challenges such as:

- Balancing performance and interpretability: Making deep neural networks both powerful and understandable.

- Standardizing explainability metrics: Creating universal benchmarks to measure what “explainable” really means.

- Human factors in XAI: Understanding how people interpret explanations and how this affects decision-making.

- Cross-domain explainability: Extending XAI to multimodal models (text, vision, speech) used in complex systems like autonomous vehicles and generative AI.

- Policy and governance: Designing frameworks for ethical AI regulation and transparent auditing.

These challenges mark the research frontier of trustworthy AI, where explainability will shape not just products but the scientific principles that guide AI development.
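To see why explainability metrics are hard to standardize, consider one commonly proposed candidate, the deletion metric: remove features in the order an explanation ranks them and watch how quickly the model's output falls. The sketch below assumes a hypothetical three-feature linear model and compares a faithful ranking with a reversed one; it illustrates one metric among several, not an agreed benchmark.

```python
# Hypothetical model: a fixed scoring function over three named features.
def model(x):
    return 0.6 * x["a"] + 0.3 * x["b"] + 0.1 * x["c"]


def deletion_auc(model, x, ranking, baseline=0.0):
    """Deletion metric: set features to `baseline` in the order an
    explanation ranks them and average the model score along the way.
    A faithful ranking removes the most important features first, so the
    score falls quickly and the area under the curve is low."""
    current = dict(x)
    scores = [model(current)]
    for feature in ranking:
        current[feature] = baseline
        scores.append(model(current))
    # Trapezoidal area under the score curve, normalised to a unit x-axis.
    steps = len(scores) - 1
    return sum((scores[i] + scores[i + 1]) / 2 for i in range(steps)) / steps


x = {"a": 1.0, "b": 1.0, "c": 1.0}
good = deletion_auc(model, x, ["a", "b", "c"])
bad = deletion_auc(model, x, ["c", "b", "a"])
print(f"faithful ranking AUC={good:.3f}, reversed ranking AUC={bad:.3f}")
```

Even this simple metric depends on choices such as the baseline value used for "removed" features, and different choices can rank the same explanations differently; that sensitivity is part of why universal benchmarks remain an open problem.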

Conclusion

As we step into 2026, Explainable AI is no longer just an academic pursuit; it is becoming the foundation of a trustworthy digital future. By opening the “black box” of machine learning, XAI empowers humans to understand, question, and refine the systems that increasingly shape our world. Whether it’s a doctor interpreting an AI-assisted diagnosis, a banker reviewing a credit decision, or a researcher developing a next-generation model, explainability bridges the gap between intelligence and integrity.

At Kenfra Research, we support PhD scholars shaping the next frontier of trustworthy AI and data-driven innovation with expert guidance from topic selection to advanced plagiarism checking.